I was wondering how Google Drive, Dropbox, and SkyDrive work, so I searched for methods of combining storage. A friend mentioned some of the technology used by Amazon S3, and he also told me about GlusterFS.

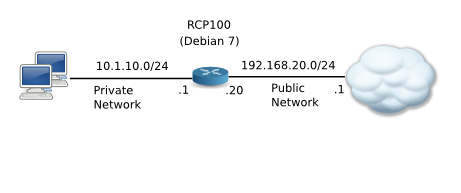

GlusterFS is an open source, distributed file system capable of scaling to several petabytes (actually, 72 brontobytes!) and handling thousands of clients. GlusterFS clusters together storage building blocks over InfiniBand RDMA or TCP/IP interconnect, aggregating disk and memory resources and managing data in a single global namespace. GlusterFS is based on a stackable user space design and can deliver exceptional performance for diverse workloads.

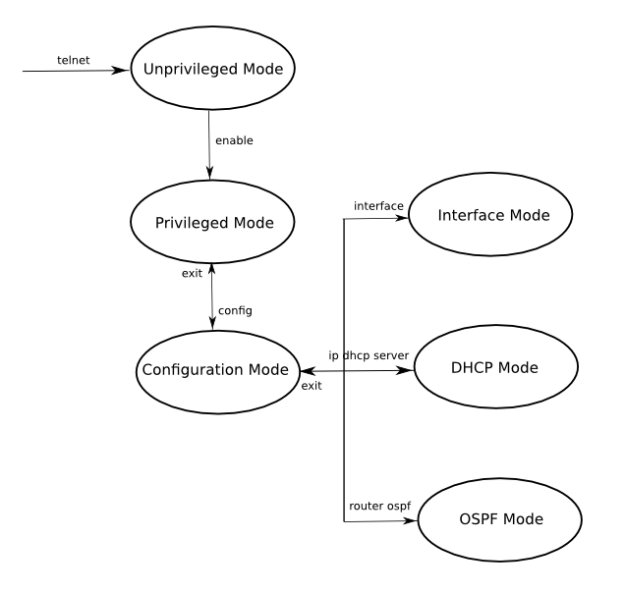

Figure 1. GlusterFS – One Common Mount Point

GlusterFS supports standard clients running standard applications over any standard IP network. Figure 1, above, illustrates how users can access application data and files in a global namespace using a variety of standard protocols.

No longer are users locked into costly, monolithic, legacy storage platforms. GlusterFS gives users the ability to deploy scale-out, virtualized storage – scaling from terabytes to petabytes in a centrally managed and commoditized pool of storage.

Attributes of GlusterFS include:

- Scalability and Performance

- High Availability

- Global Namespace

- Elastic Hash Algorithm

- Elastic Volume Manager

- Gluster Console Manager

- Standards-based

Please note that the kind of storage set up in this tutorial (distributed storage) doesn't provide any high-availability features, as replicated storage would.

1 Preliminary Note

In this tutorial I use five systems, four servers and a client:

- server1.example.com: IP address 192.168.0.100 (server)

- server2.example.com: IP address 192.168.0.101 (server)

- server3.example.com: IP address 192.168.0.102 (server)

- server4.example.com: IP address 192.168.0.103 (server)

- client1.example.com: IP address 192.168.0.104 (client)

All five systems should be able to resolve each other's hostnames. If this cannot be done through DNS, edit /etc/hosts on all five systems so that it looks as follows:

vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.0.100 server1.example.com server1

192.168.0.101 server2.example.com server2

192.168.0.102 server3.example.com server3

192.168.0.103 server4.example.com server4

192.168.0.104 client1.example.com client1

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

(It is also possible to use IP addresses instead of hostnames in the following setup. If you prefer to use IP addresses, you don't have to care about whether the hostnames can be resolved or not.)
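The host entries above follow a regular pattern, so they can be generated instead of typed by hand. This is only a minimal sketch, assuming the example.com names and 192.168.0.x addresses used in this tutorial; the function name hosts_entries is made up for illustration, and it only prints the lines so you can review them before adding them to /etc/hosts.

```shell
#!/bin/sh
# Print /etc/hosts entries for the four servers and the client used in
# this tutorial. hosts_entries is a hypothetical helper name; it only
# prints the lines and never modifies /etc/hosts itself.
hosts_entries() {
    i=0
    for name in server1 server2 server3 server4 client1; do
        printf '192.168.0.%d %s.example.com %s\n' "$((100 + i))" "$name" "$name"
        i=$((i + 1))
    done
}

hosts_entries
```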

2 Enable Additional Repositories

server1.example.com/server2.example.com/server3.example.com/server4.example.com/client1.example.com:

First we import the GPG keys for software packages:

rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY*

Then we enable the EPEL6 repository on our CentOS systems:

rpm --import https://fedoraproject.org/static/0608B895.txt

cd /tmp

wget http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-7.noarch.rpm

rpm -ivh epel-release-6-7.noarch.rpm

yum install yum-priorities

Edit /etc/yum.repos.d/epel.repo…

vi /etc/yum.repos.d/epel.repo

… and add the line priority=10 to the [epel] section:

[epel]

name=Extra Packages for Enterprise Linux 6 - $basearch

#baseurl=http://download.fedoraproject.org/pub/epel/6/$basearch

mirrorlist=https://mirrors.fedoraproject.org/metalink?repo=epel-6&arch=$basearch

failovermethod=priority

enabled=1

priority=10

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-6

[...]
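If you would rather not edit the repo file in vi, the priority line can also be added with a small filter. This is a sketch under assumptions, not the packaged way of doing it: add_epel_priority is a hypothetical helper that writes the modified file to standard output, places priority=10 directly below the [epel] header, and drops any priority line that section already had.

```shell
#!/bin/sh
# Print a copy of the given repo file with priority=10 set in the
# [epel] section. add_epel_priority is a hypothetical helper name; the
# original file is left untouched.
add_epel_priority() {
    awk '
        /^\[/ { in_epel = ($0 == "[epel]") }   # track which section we are in
        in_epel && /^priority=/ { next }        # drop an existing priority line
        { print }
        $0 == "[epel]" { print "priority=10" }  # add the line below the header
    ' "$1"
}

# Example (review the result before replacing the real file):
# add_epel_priority /etc/yum.repos.d/epel.repo > /tmp/epel.repo
```

Writing to a separate file first keeps the original intact in case something goes wrong.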

3 Setting Up The GlusterFS Servers

server1.example.com/server2.example.com/server3.example.com/server4.example.com:

GlusterFS is available as a package for EPEL, therefore we can install it as follows:

yum install glusterfs-server

Create the system startup links for the Gluster daemon and start it:

chkconfig --levels 235 glusterd on

/etc/init.d/glusterd start

The command

glusterfsd --version

should now show the GlusterFS version that you've just installed (3.2.7 in this case):

[root@server1 ~]# glusterfsd --version

glusterfs 3.2.7 built on Jun 11 2012 13:22:28

Repository revision: git://git.gluster.com/glusterfs.git

Copyright (c) 2006-2011 Gluster Inc. <http://www.gluster.com>

GlusterFS comes with ABSOLUTELY NO WARRANTY.

You may redistribute copies of GlusterFS under the terms of the GNU General Public License.

[root@server1 ~]#

If you use a firewall, ensure that TCP ports 111, 24007, 24008, and 24009 through (24009 + number of bricks across all volumes) are open on server1.example.com, server2.example.com, server3.example.com, and server4.example.com.
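To make the port rule concrete, here is a small sketch that works out the brick port range for a given number of bricks and prints matching firewall rules. Using iptables is my assumption (the tutorial does not name a firewall tool), and the function names are made up; the rules are only printed, not applied.

```shell
#!/bin/sh
# Compute the brick port range described above: 24009 up to
# 24009 + number of bricks across all volumes.
brick_port_range() {
    bricks=$1
    echo "24009:$((24009 + bricks))"
}

# Print (do not run) iptables rules for the fixed ports and the brick
# range. iptables is an assumption; adapt the rules to your firewall.
print_gluster_rules() {
    echo "iptables -A INPUT -p tcp -m multiport --dports 111,24007,24008 -j ACCEPT"
    echo "iptables -A INPUT -p tcp --dport $(brick_port_range "$1") -j ACCEPT"
}

print_gluster_rules 4   # four bricks, as in this tutorial
```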

Next we must add server2.example.com, server3.example.com, and server4.example.com to the trusted storage pool (please note that I'm running all GlusterFS configuration commands from server1.example.com, but you can just as well run them from server2.example.com, server3.example.com, or server4.example.com because the configuration is replicated between the GlusterFS nodes; just make sure you use the correct hostnames or IP addresses):

server1.example.com:

On server1.example.com, run

gluster peer probe server2.example.com

gluster peer probe server3.example.com

gluster peer probe server4.example.com

Output should be as follows:

[root@server1 ~]# gluster peer probe server2.example.com

Probe successful

[root@server1 ~]#
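With more than a handful of peers, the three probe commands above are easier to run as a loop. A minimal sketch: probe_peers and the GLUSTER variable are names I made up, and setting GLUSTER=echo gives a dry run that only prints the commands.

```shell
#!/bin/sh
# GLUSTER is the command to run; override it (e.g. GLUSTER=echo) for a
# dry run. Both the variable and the function name are hypothetical.
GLUSTER=${GLUSTER:-gluster}

# Probe every peer given as an argument; stop at the first failure.
probe_peers() {
    for peer in "$@"; do
        $GLUSTER peer probe "$peer" || return 1
    done
}

# Usage on server1.example.com:
# probe_peers server2.example.com server3.example.com server4.example.com
```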

The status of the trusted storage pool should now be similar to this:

gluster peer status

[root@server1 ~]# gluster peer status

Number of Peers: 3

Hostname: server2.example.com

Uuid: da79c994-eaf1-4c1c-a136-f8b273fb0c98

State: Peer in Cluster (Connected)

Hostname: server3.example.com

Uuid: 3e79bd9f-a4d5-4373-88e1-40f12861dcdd

State: Peer in Cluster (Connected)

Hostname: server4.example.com

Uuid: c6215943-00f3-492f-9b69-3aa534c1d8f3

State: Peer in Cluster (Connected)

[root@server1 ~]#
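In a script you may want to check the pool state without reading the output by eye. The sketch below simply counts the "Peer in Cluster (Connected)" lines in output shaped like the sample above; count_connected_peers is a hypothetical helper, and it assumes the output format of the version shown here.

```shell
#!/bin/sh
# Count peers reported as connected. Reads "gluster peer status" output
# on standard input; count_connected_peers is a hypothetical helper name.
count_connected_peers() {
    grep -cF 'State: Peer in Cluster (Connected)'
}

# Usage on any server in the pool:
# gluster peer status | count_connected_peers
```

With the pool from this tutorial the result should be 3, because a server does not list itself as a peer.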

Next we create the distributed share named testvol on server1.example.com, server2.example.com, server3.example.com, and server4.example.com in the /data directory (this will be created if it doesn’t exist):

gluster volume create testvol transport tcp server1.example.com:/data server2.example.com:/data server3.example.com:/data server4.example.com:/data

[root@server1 ~]# gluster volume create testvol transport tcp server1.example.com:/data server2.example.com:/data server3.example.com:/data server4.example.com:/data

Creation of volume testvol has been successful. Please start the volume to access data.

[root@server1 ~]#
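The brick arguments in the create command all follow the pattern host:/directory, so they can be built from a host list. A small sketch, assuming every server uses the same brick directory; brick_list is a made-up helper name.

```shell
#!/bin/sh
# Build a space-separated "host:dir" brick list. The first argument is
# the brick directory, the rest are hostnames. brick_list is a
# hypothetical helper name.
brick_list() {
    dir=$1
    shift
    list=
    for host in "$@"; do
        list="${list:+$list }$host:$dir"
    done
    echo "$list"
}

# Usage (the substitution is left unquoted on purpose so that each
# brick becomes its own argument):
# gluster volume create testvol transport tcp \
#     $(brick_list /data server1.example.com server2.example.com \
#                        server3.example.com server4.example.com)
```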

Start the volume:

gluster volume start testvol

It is possible that the above command tells you that the action was not successful:

[root@server1 ~]# gluster volume start testvol

Starting volume testvol has been unsuccessful

[root@server1 ~]#

In this case you should check the output of…

server1.example.com/server2.example.com/server3.example.com/server4.example.com:

netstat -tap | grep glusterfsd

on all four servers.

If you get output like this…

[root@server1 ~]# netstat -tap | grep glusterfsd

tcp 0 0 *:24009 *:* LISTEN 1365/glusterfsd

tcp 0 0 localhost:1023 localhost:24007 ESTABLISHED 1365/glusterfsd

tcp 0 0 server1.example.com:24009 server1.example.com:1023 ESTABLISHED 1365/glusterfsd

[root@server1 ~]#

… everything is fine, but if you don’t get any output…

[root@server2 ~]# netstat -tap | grep glusterfsd

[root@server2 ~]#

[root@server3 ~]# netstat -tap | grep glusterfsd

[root@server3 ~]#

[root@server4 ~]# netstat -tap | grep glusterfsd

[root@server4 ~]#

… restart the GlusterFS daemon on the corresponding server (server2.example.com, server3.example.com, and server4.example.com in this case):

server2.example.com/server3.example.com/server4.example.com:

/etc/init.d/glusterfsd restart

Then check the output of…

netstat -tap | grep glusterfsd

… again on these servers – it should now look like this:

[root@server2 ~]# netstat -tap | grep glusterfsd

tcp 0 0 *:24009 *:* LISTEN 1152/glusterfsd

tcp 0 0 localhost.localdom:1018 localhost.localdo:24007 ESTABLISHED 1152/glusterfsd

[root@server2 ~]#

[root@server3 ~]# netstat -tap | grep glusterfsd

tcp 0 0 *:24009 *:* LISTEN 1311/glusterfsd

tcp 0 0 localhost.localdom:1018 localhost.localdo:24007 ESTABLISHED 1311/glusterfsd

[root@server3 ~]#

[root@server4 ~]# netstat -tap | grep glusterfsd

tcp 0 0 *:24009 *:* LISTEN 1297/glusterfsd

tcp 0 0 localhost.localdom:1019 localhost.localdo:24007 ESTABLISHED 1297/glusterfsd

[root@server4 ~]#
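The check-and-restart step above can be wrapped in a small test. This sketch only looks for a LISTEN line belonging to glusterfsd in netstat output like the samples shown; brick_is_listening is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed if the netstat output on standard input contains a listening
# glusterfsd socket. brick_is_listening is a hypothetical helper name.
brick_is_listening() {
    grep -q 'LISTEN.*glusterfsd'
}

# Usage on each server: restart the daemon only when nothing is listening.
# netstat -tap | brick_is_listening || /etc/init.d/glusterfsd restart
```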

Now back to server1.example.com:

server1.example.com:

You can check the status of the volume with the command

gluster volume info

[root@server1 ~]# gluster volume info

Volume Name: testvol

Type: Distribute

Status: Started

Number of Bricks: 4

Transport-type: tcp

Bricks:

Brick1: server1.example.com:/data

Brick2: server2.example.com:/data

Brick3: server3.example.com:/data

Brick4: server4.example.com:/data

[root@server1 ~]#

By default, all clients can connect to the volume. If you want to grant access to client1.example.com (= 192.168.0.104) only, run:

gluster volume set testvol auth.allow 192.168.0.104

Please note that it is possible to use wildcards for the IP addresses (like 192.168.*) and that you can specify multiple IP addresses separated by commas (e.g. 192.168.0.104,192.168.0.105).
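When the list of allowed clients lives in a script, the comma-separated value can be assembled from separate arguments. A minimal sketch; join_by_comma is a made-up helper, and the second address is just the example from the note above.

```shell
#!/bin/sh
# Join all arguments with commas. The function body runs in a subshell
# so that changing IFS does not affect the caller.
join_by_comma() (
    IFS=,
    echo "$*"
)

# Usage on server1.example.com:
# gluster volume set testvol auth.allow "$(join_by_comma 192.168.0.104 192.168.0.105)"
```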

The volume info should now show the updated status:

gluster volume info

[root@server1 ~]# gluster volume info

Volume Name: testvol

Type: Distribute

Status: Started

Number of Bricks: 4

Transport-type: tcp

Bricks:

Brick1: server1.example.com:/data

Brick2: server2.example.com:/data

Brick3: server3.example.com:/data

Brick4: server4.example.com:/data

Options Reconfigured:

auth.allow: 192.168.0.104

[root@server1 ~]#
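The "Name: value" layout of the volume info output makes single fields easy to pull out, for example to confirm the status or the auth.allow setting from a script. This is a sketch that assumes the output format shown above; volume_field is a hypothetical helper name.

```shell
#!/bin/sh
# Print the value of one "Key: value" line from "gluster volume info"
# output read on standard input. volume_field is a hypothetical helper.
volume_field() {
    awk -F': ' -v key="$1" '$1 == key { print $2 }'
}

# Usage:
# gluster volume info | volume_field Status
# gluster volume info | volume_field auth.allow
```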

4 Setting Up The GlusterFS Client

client1.example.com:

On the client, we can install the GlusterFS client as follows:

yum install glusterfs-client

Then we create the following directory:

mkdir /mnt/glusterfs

That’s it! Now we can mount the GlusterFS filesystem to /mnt/glusterfs with the following command:

mount.glusterfs server1.example.com:/testvol /mnt/glusterfs

(Instead of server1.example.com you can as well use server2.example.com or server3.example.com or server4.example.com in the above command!)

You should now see the new share in the outputs of…

mount

[root@client1 ~]# mount

/dev/mapper/vg_client1-LogVol00 on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw)

/dev/sda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

server1.example.com:/testvol on /mnt/glusterfs type fuse.glusterfs (rw,allow_other,default_permissions,max_read=131072)

[root@client1 ~]#
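Instead of scanning the mount output by eye, a script can look for the fuse.glusterfs entry directly. A small sketch that assumes the "device on mountpoint type fstype (options)" layout shown above; is_gluster_mounted is a made-up helper name.

```shell
#!/bin/sh
# Succeed if the mount output on standard input shows the given mount
# point with filesystem type fuse.glusterfs. is_gluster_mounted is a
# hypothetical helper name.
is_gluster_mounted() {
    awk -v mp="$1" '
        $3 == mp && $5 == "fuse.glusterfs" { found = 1 }
        END { exit !found }
    '
}

# Usage on the client:
# mount | is_gluster_mounted /mnt/glusterfs && echo "share is mounted"
```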

… and…

df -h

[root@client1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_client1-LogVol00

9.7G 1.7G 7.5G 19% /

tmpfs 499M 0 499M 0% /dev/shm

/dev/sda1 504M 39M 440M 9% /boot

server1.example.com:/testvol

116G 4.2G 106G 4% /mnt/glusterfs

[root@client1 ~]#

Instead of mounting the GlusterFS share manually on the client, you could modify /etc/fstab so that the share gets mounted automatically when the client boots.

Open /etc/fstab and append the following line:

vi /etc/fstab

[...]

server1.example.com:/testvol /mnt/glusterfs glusterfs defaults,_netdev 0 0

(Again, instead of server1.example.com you can as well use server2.example.com or server3.example.com or server4.example.com!)
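If you set up several clients, appending the fstab line from a script is handy, as long as running it twice does not duplicate the entry. A minimal sketch: add_fstab_entry is a hypothetical helper that takes the file to edit as its argument, so you can try it on a copy first.

```shell
#!/bin/sh
# Append the GlusterFS line from this tutorial to the given fstab file
# unless the exact line is already there. add_fstab_entry is a
# hypothetical helper name.
add_fstab_entry() {
    fstab=$1
    entry='server1.example.com:/testvol /mnt/glusterfs glusterfs defaults,_netdev 0 0'
    grep -qxF "$entry" "$fstab" || echo "$entry" >> "$fstab"
}

# Usage on the client (as root), after testing on a copy:
# add_fstab_entry /etc/fstab
```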

To test if your modified /etc/fstab is working, reboot the client:

reboot

After the reboot, you should find the share in the outputs of…

df -h

… and…

mount

5 Testing

Now let's create some test files on the GlusterFS share:

client1.example.com:

touch /mnt/glusterfs/test1

touch /mnt/glusterfs/test2

touch /mnt/glusterfs/test3

touch /mnt/glusterfs/test4

touch /mnt/glusterfs/test5

touch /mnt/glusterfs/test6

Now let’s check the /data directory on server1.example.com, server2.example.com, server3.example.com, and server4.example.com. You will notice that each storage node holds only a part of the files/directories that make up the GlusterFS share on the client:

server1.example.com:

ls -l /data

[root@server1 ~]# ls -l /data

total 0

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test1

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test2

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test5

[root@server1 ~]#

server2.example.com:

ls -l /data

[root@server2 ~]# ls -l /data

total 0

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test4

[root@server2 ~]#

server3.example.com:

ls -l /data

[root@server3 ~]# ls -l /data

total 0

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test6

[root@server3 ~]#

server4.example.com:

ls -l /data

[root@server4 ~]# ls -l /data

total 0

-rw-r--r-- 1 root root 0 2012-12-17 14:26 test3

[root@server4 ~]#
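To summarize listings like the ones above, you can tally the files per server and confirm that no file shows up on more than one brick. This is only a sketch: both function names are made up, and the input format ("host filename" per line) is my own convention, not something GlusterFS produces.

```shell
#!/bin/sh
# Both helpers read lines of the form "host filename" on standard input.
# The names and the input format are hypothetical.

# Print "host count" for every host, sorted by host name.
files_per_host() {
    awk '{ n[$1]++ } END { for (h in n) print h, n[h] }' | sort
}

# Print any filename that appears on more than one host. On a purely
# distributed volume this should print nothing.
files_on_multiple_bricks() {
    awk '{ print $2 }' | sort | uniq -d
}

# One way to collect the input, assuming root SSH access to the servers:
# for h in server1 server2 server3 server4; do
#     ssh "root@$h.example.com" ls /data | sed "s/^/$h /"
# done | files_per_host
```

For the six test files above this gives three files on server1 and one on each of the other servers.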
